scikit-learn: machine learning in Python

Easy-to-use and general-purpose machine learning in Python

scikit-learn is a Python module integrating classic machine learning algorithms into the tightly knit scientific Python ecosystem (NumPy, SciPy, matplotlib). It aims to provide simple and efficient solutions to learning problems that are accessible to everybody and reusable in various contexts: machine learning as a versatile tool for science and engineering.
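
Estimators in scikit-learn share a common pattern: construct the estimator, call `fit` on training data, then call `predict` (or `score`) on new data. The sketch below illustrates this on the bundled iris dataset. It assumes a scikit-learn installation with NumPy available. The calls it relies on (`datasets.load_iris`, `svm.SVC`, `fit`, `predict`, `score`) are long-standing parts of the library, but default parameter values have changed between releases. The 100/50 split and the linear kernel are illustrative choices only, not recommendations.

```python
import numpy as np
from sklearn import datasets, svm

# Load the bundled iris dataset: 150 samples, 4 features, 3 classes.
iris = datasets.load_iris()
X, y = iris.data, iris.target

# The samples are stored grouped by class, so shuffle before holding out a test set.
rng = np.random.RandomState(0)
indices = rng.permutation(len(X))
train, test = indices[:100], indices[100:]

# Construct, fit, then predict: the same pattern applies to other estimators.
clf = svm.SVC(kernel="linear", C=1.0)
clf.fit(X[train], y[train])
predicted = clf.predict(X[test])

# score() reports the mean accuracy on the held-out samples.
accuracy = clf.score(X[test], y[test])
print("held-out accuracy: %.2f" % accuracy)
```

Because classifiers expose the same `fit`/`predict`/`score` methods, replacing `svm.SVC` with a classifier from another module, for example `sklearn.neighbors` or `sklearn.tree`, should only require changing the constructor line.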

License: open source and commercially usable (3-clause BSD license)

Documentation for scikit-learn version 0.11-git. For other versions and a printable format, see Documentation resources.

User Guide

- 1. Installing scikit-learn

- 2. Tutorials: From the bottom up with scikit-learn

- 3. Supervised learning

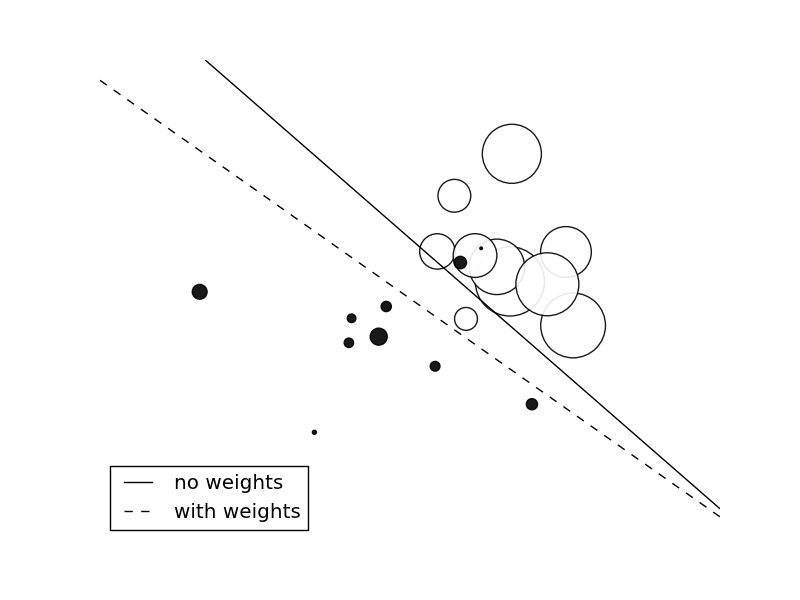

- 3.1. Generalized Linear Models

- 3.2. Support Vector Machines

- 3.3. Stochastic Gradient Descent

- 3.4. Nearest Neighbors

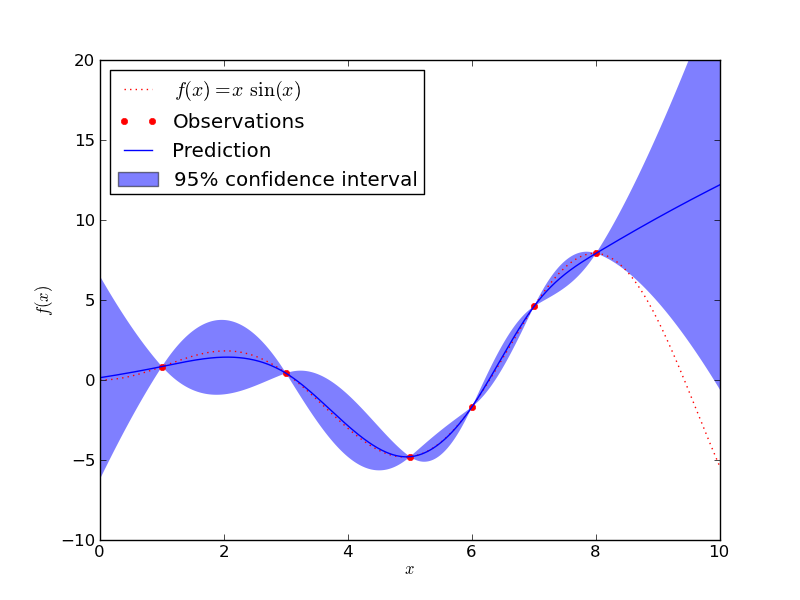

- 3.5. Gaussian Processes

- 3.6. Partial Least Squares

- 3.7. Naive Bayes

- 3.8. Decision Trees

- 3.9. Ensemble methods

- 3.10. Multiclass and multilabel algorithms

- 3.11. Feature selection

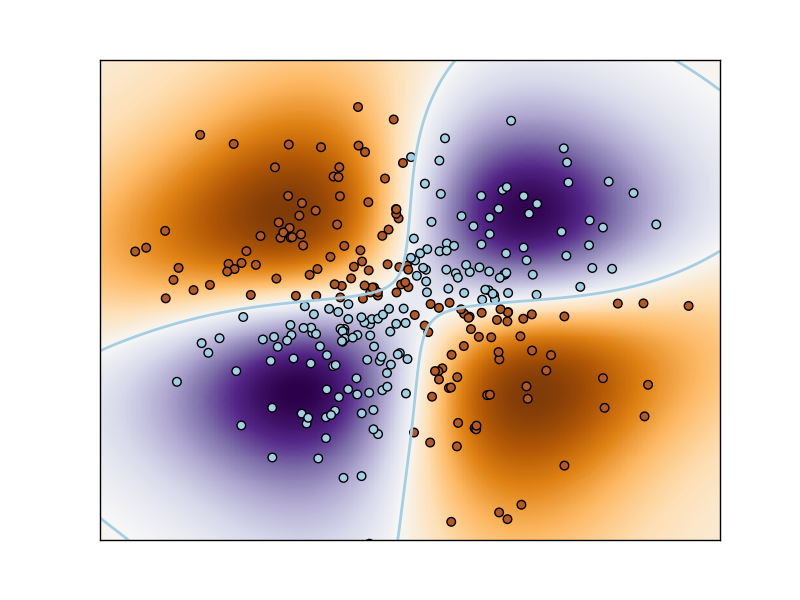

- 3.12. Semi-Supervised

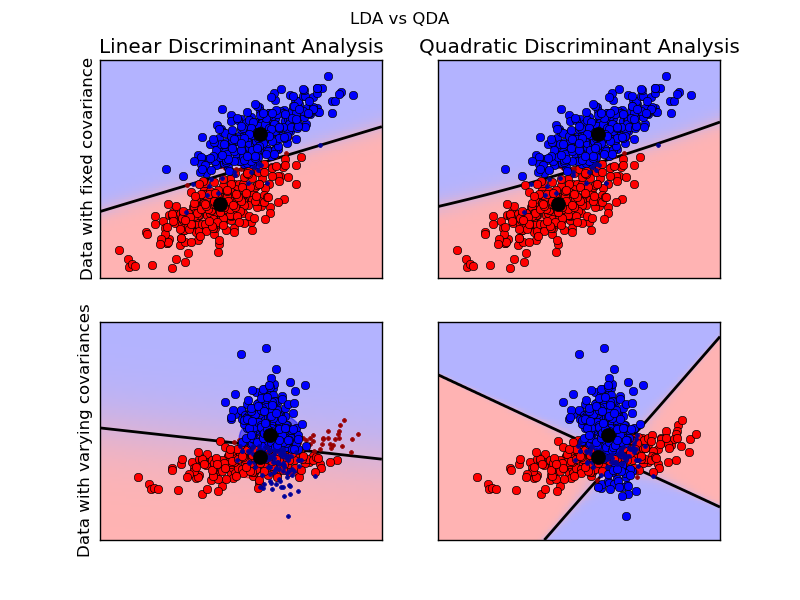

- 3.13. Linear and Quadratic Discriminant Analysis

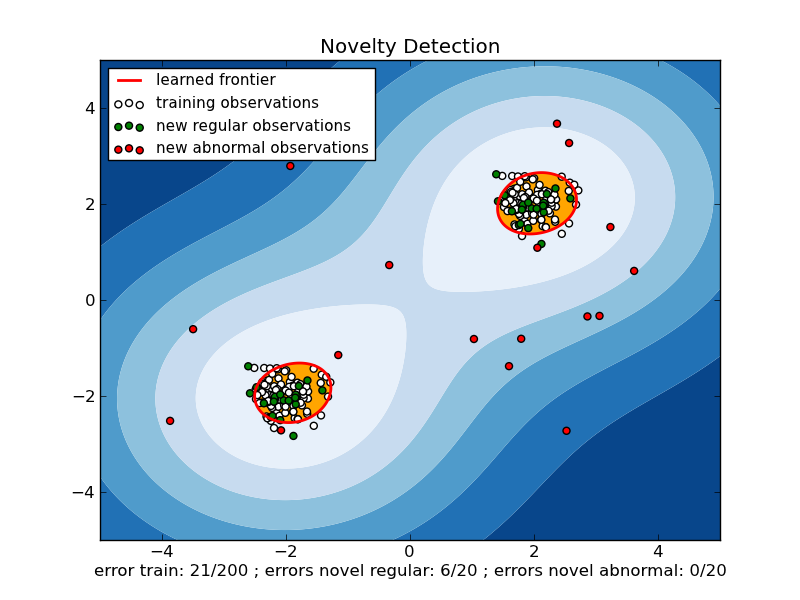

- 4. Unsupervised learning

- 5. Model Selection

- 6. Dataset transformations

- 7. Dataset loading utilities

- 7.1. General dataset API

- 7.2. Toy datasets

- 7.3. Sample images

- 7.4. Sample generators

- 7.5. Datasets in svmlight / libsvm format

- 7.6. The Olivetti faces dataset

- 7.7. The 20 newsgroups text dataset

- 7.8. Downloading datasets from the mldata.org repository

- 7.9. The Labeled Faces in the Wild face recognition dataset

- 8. Reference

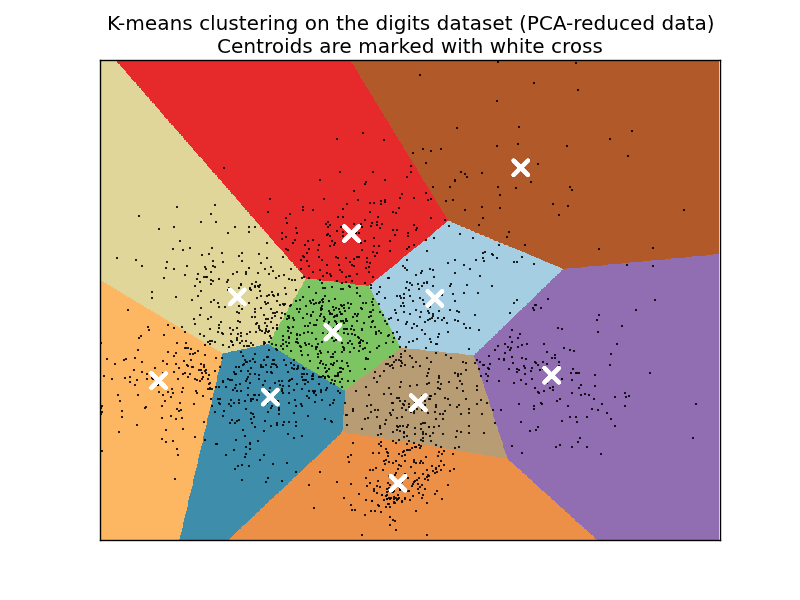

- 8.1. sklearn.cluster: Clustering

- 8.2. sklearn.covariance: Covariance Estimators

- 8.3. sklearn.cross_validation: Cross Validation

- 8.4. sklearn.datasets: Datasets

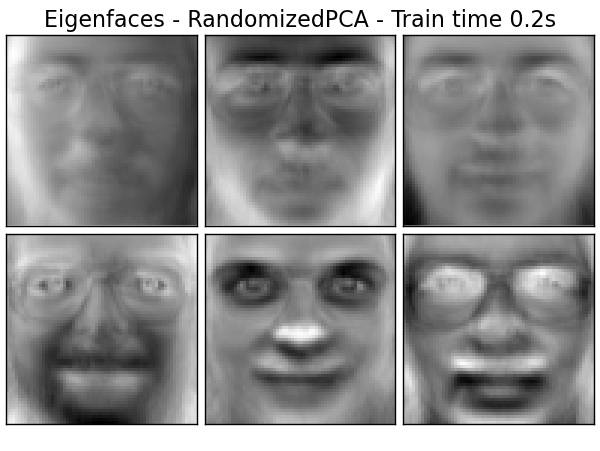

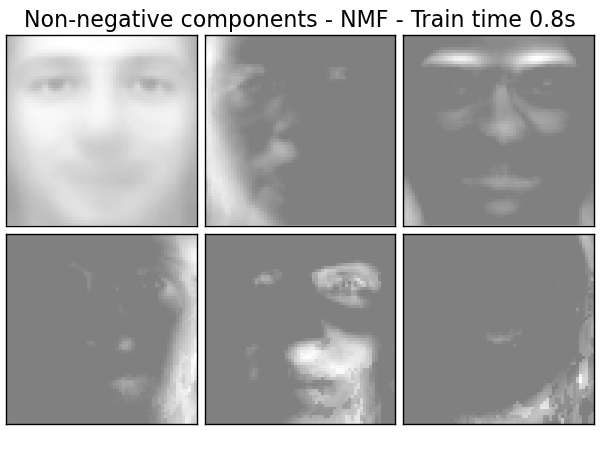

- 8.5. sklearn.decomposition: Matrix Decomposition

- 8.6. sklearn.ensemble: Ensemble Methods

- 8.7. sklearn.feature_extraction: Feature Extraction

- 8.8. sklearn.feature_selection: Feature Selection

- 8.9. sklearn.gaussian_process: Gaussian Processes

- 8.10. sklearn.grid_search: Grid Search

- 8.11. sklearn.hmm: Hidden Markov Models

- 8.12. sklearn.kernel_approximation: Kernel Approximation

- 8.13. sklearn.semi_supervised: Semi-Supervised Learning

- 8.14. sklearn.lda: Linear Discriminant Analysis

- 8.15. sklearn.linear_model: Generalized Linear Models

- 8.16. sklearn.manifold: Manifold Learning

- 8.17. sklearn.metrics: Metrics

- 8.18. sklearn.mixture: Gaussian Mixture Models

- 8.19. sklearn.multiclass: Multiclass and multilabel classification

- 8.20. sklearn.naive_bayes: Naive Bayes

- 8.21. sklearn.neighbors: Nearest Neighbors

- 8.22. sklearn.pls: Partial Least Squares

- 8.23. sklearn.pipeline: Pipeline

- 8.24. sklearn.preprocessing: Preprocessing and Normalization

- 8.25. sklearn.qda: Quadratic Discriminant Analysis

- 8.26. sklearn.svm: Support Vector Machines

- 8.27. sklearn.tree: Decision Trees

- 8.28. sklearn.utils: Utilities

Example Gallery

- Examples

- General examples

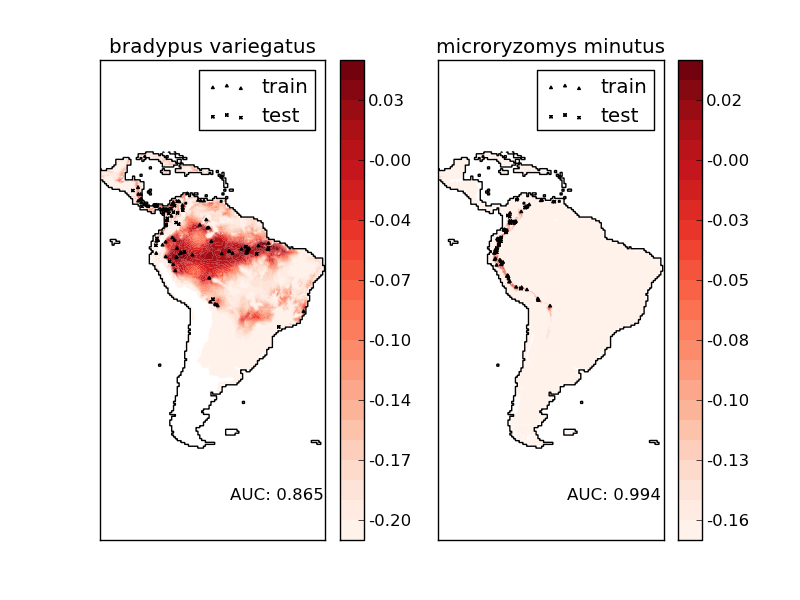

- Examples based on real world datasets

- Clustering

- Covariance estimation

- Decomposition

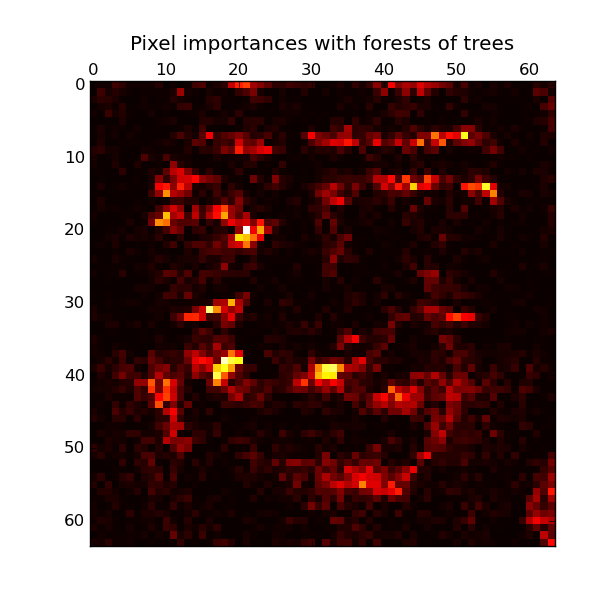

- Ensemble methods

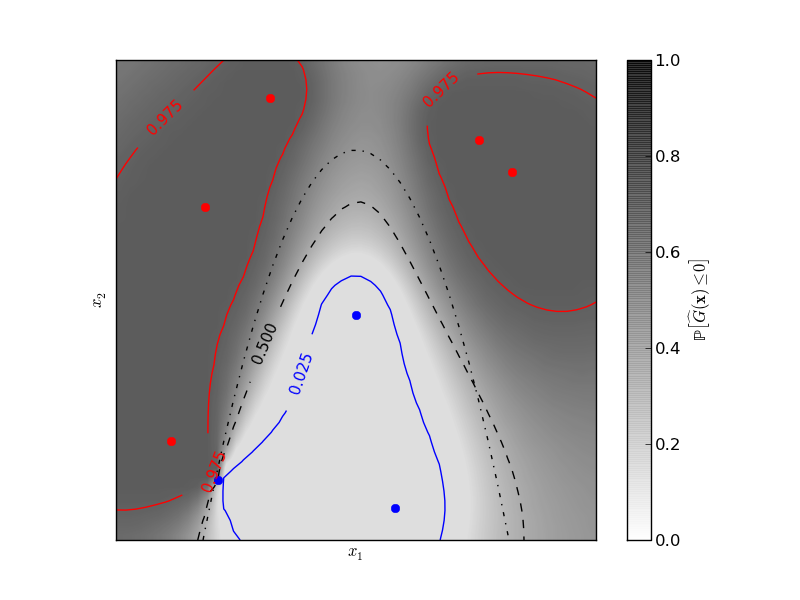

- Gaussian Process for Machine Learning

- Generalized Linear Models

- Manifold learning

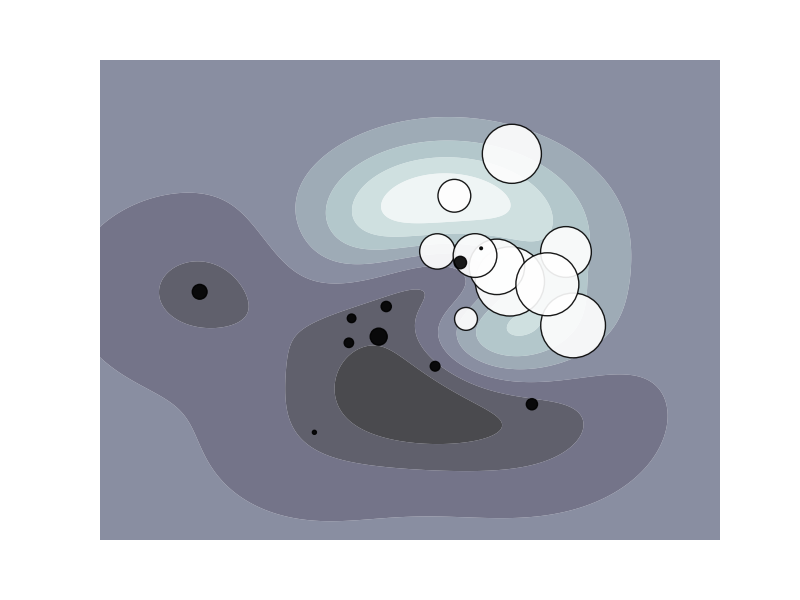

- Gaussian Mixture Models

- Nearest Neighbors

- Semi-Supervised Classification

- Support Vector Machines

- Decision Trees